Human-Robot Interaction

Robot Perception and Decision-Making Algorithms

Human Group Detection & Tracking Algorithms

As mobile robots work side by side with their human teammates, they need computer vision techniques that enable them to perceive groups. To this end, we develop computer vision algorithms for mobile robots (i.e., robot vision) operating in real-world environments, so that they can sense the people around them and make sense of their actions. Our robot vision algorithms are robust to real-world vision challenges such as occlusion, camera egomotion, shadows, and varying illumination. We hope this work will enable the development of robots that can more effectively locate and perceive their teammates, particularly in uncertain, unstructured environments. [HRI 2022, RCAR 2024, THRI 2020]

MARLHospital: A Multi-agent Reinforcement Learning Simulation Environment for Medical Decision-Making

Hospital teams in the emergency ward are usually made up of experts with different skill sets and specialties. In such a diverse team of healthcare workers (HCWs), real-world events such as role switching between HCWs during tasks, assigning tasks to HCWs outside their specialties due to insufficient human resources, and task dependencies pose significant challenges that can delay care and hinder optimal patient outcomes. In this research, we formulate medical tasks in the emergency room as multi-agent reinforcement learning (MARL) Markov games and introduce MARLHospital as a benchmark environment. The benchmark provides a systematic evaluation of two classes of MARL algorithms (independent learning and value decomposition) and quantifies the effects of changes in team dynamics, roles, and skills in safety-critical environments. We demonstrate the effectiveness of our proposed framework in quantifying care delays due to agents' skill levels and expertise in team settings. Our results show that value decomposition algorithms outperform independent learning algorithms.
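To make the distinction between the two algorithm classes concrete, the following is a minimal sketch, not the benchmark's implementation. It assumes tabular per-agent utilities, a shared team reward, and a VDN-style additive decomposition; the agent names, values, and function names are hypothetical.

```python
from typing import Dict, List

# Hypothetical per-agent utilities for one state: q[agent][action] -> value.
QTable = Dict[str, List[float]]


def greedy_joint_action(q: QTable) -> Dict[str, int]:
    """Each agent picks the argmax of its own utility (decentralized execution)."""
    return {
        agent: max(range(len(values)), key=values.__getitem__)
        for agent, values in q.items()
    }


def vdn_total(q: QTable, joint_action: Dict[str, int]) -> float:
    """Additive (VDN-style) joint value: Q_tot = sum_i Q_i(a_i)."""
    return sum(q[agent][action] for agent, action in joint_action.items())


def independent_td_errors(
    q: QTable,
    next_q: QTable,
    joint_action: Dict[str, int],
    team_reward: float,
    gamma: float = 0.9,
) -> Dict[str, float]:
    """Independent learning: every agent bootstraps from its own utility only."""
    return {
        agent: team_reward + gamma * max(next_q[agent]) - q[agent][action]
        for agent, action in joint_action.items()
    }


def vdn_td_error(
    q: QTable,
    next_q: QTable,
    joint_action: Dict[str, int],
    team_reward: float,
    gamma: float = 0.9,
) -> float:
    """Value decomposition: one TD error on the summed utilities."""
    next_total = sum(max(values) for values in next_q.values())
    return team_reward + gamma * next_total - vdn_total(q, joint_action)


# Illustrative values only (assumed, not taken from the benchmark).
q_now = {"nurse": [0.0, 1.0], "doctor": [2.0, 0.5]}
q_next = {"nurse": [1.0, 0.0], "doctor": [0.0, 3.0]}
joint = greedy_joint_action(q_now)
```

In the independent case each agent receives its own error signal and treats its teammates as part of the environment. In the decomposed case a single error on the summed value is shared across all agents' utilities, which is one way credit for a team reward can be distributed.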

Multi-Agent Robotic Systems

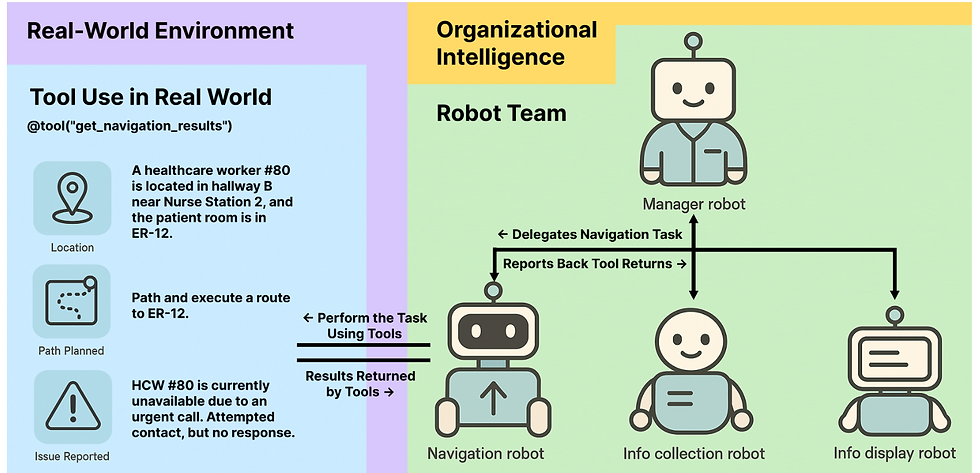

Multi-agent robotic systems (MARS) build upon multi-agent systems by integrating physical and task-related constraints, increasing the complexity of action execution and agent coordination. However, despite the availability of advanced multi-agent frameworks, their real-world deployment on robots remains limited, hindering the advancement of MARS research in practice. To bridge this gap, we conducted two studies to investigate performance trade-offs of hierarchical multi-agent frameworks in a simulated real-world multi-robot healthcare scenario.

In the first study, we iteratively refine the knowledge base of MARS using CrewAI to systematically identify and categorize coordination failures (e.g., tool access violations, lack of timely handling of failure reports) that cannot be resolved by providing contextual knowledge alone. In the second study, we evaluate a redesigned bidirectional communication structure using AutoGen and measure the trade-offs between reasoning and non-reasoning models operating within the same robotic team setting. Drawing on our empirical findings, we emphasize the tension between autonomy and stability and the importance of edge-case testing for improving system reliability and safety in future real-world deployment. [Project Page]

Acuity-Aware Robot Navigation in Emergency Rooms

The emergency department (ED) is a safety-critical environment in which healthcare workers (HCWs) are overburdened, overworked, and have limited resources, especially during the COVID-19 pandemic. One way to address this problem is to explore the use of robots that can support clinical teams, e.g., by delivering materials or restocking supplies. However, because EDs are overcrowded and HCWs experience cognitive overload, robots need to understand various levels of patient acuity so that they avoid disrupting care delivery. In this paper, we introduce the Safety-Critical Deep Q-Network (SafeDQN) system, a new acuity-aware navigation system for mobile robots. SafeDQN is based on two insights about care in EDs: high-acuity patients tend to have more HCWs in attendance, and those HCWs tend to move more quickly. We compared SafeDQN to three classic navigation methods and showed that it generates the safest, quickest path for mobile robots navigating in a simulated ED environment. We hope this work encourages future exploration of social robots that work in safety-critical, human-centered environments, and ultimately helps to improve patient outcomes and save lives. [ICRA2019, R-AL2023, AAAI2020]
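The two insights above can be turned into a navigation signal in many ways. The sketch below is one hypothetical illustration, not SafeDQN's actual formulation. It assumes that the number of nearby HCWs and their mean speed are already available from perception, and that they are combined with equal weights; every name, threshold, and weight here is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ZoneObservation:
    """Hypothetical perception output for the area around one patient."""
    num_hcws: int          # HCWs detected in attendance
    mean_speed_mps: float  # average HCW speed, metres per second


def acuity_score(
    obs: ZoneObservation,
    max_hcws: int = 5,            # assumed saturation point
    max_speed_mps: float = 2.0,   # assumed saturation point
) -> float:
    """Map both cues to [0, 1]; more HCWs and faster motion raise the score."""
    crowd = min(obs.num_hcws / max_hcws, 1.0)
    motion = min(obs.mean_speed_mps / max_speed_mps, 1.0)
    return 0.5 * crowd + 0.5 * motion  # equal weighting is an assumption


def step_reward(
    obs: ZoneObservation,
    reached_goal: bool,
    step_cost: float = -0.1,        # encourages short paths
    acuity_penalty: float = -10.0,  # discourages disrupting urgent care
    goal_reward: float = 10.0,
) -> float:
    """Reward a learning agent could receive for entering the observed zone."""
    reward = step_cost + acuity_penalty * acuity_score(obs)
    if reached_goal:
        reward += goal_reward
    return reward


quiet = ZoneObservation(num_hcws=1, mean_speed_mps=0.4)
urgent = ZoneObservation(num_hcws=4, mean_speed_mps=1.8)
```

Under these assumptions, a zone with several fast-moving HCWs yields a much lower reward than a quiet one, so a Q-learning agent trained on such a signal would tend to route around high-acuity areas while still being penalized for long detours.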